AI is a political topic - and it concerns you too

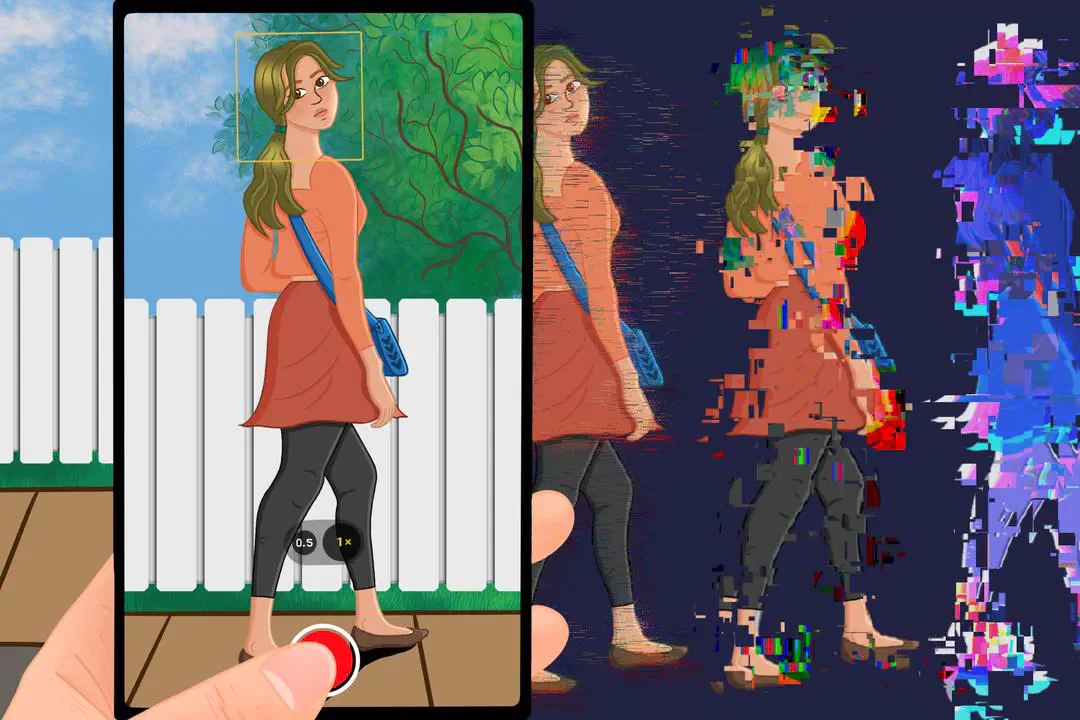

Reihaneh Golpayegani / https://betterimagesofai.org / https://creativecommons.org/licenses/by/4.0/

Note: Two versions of this article have been published, one in Swedish in Nerikes Allehanda and one in French in Le Club Mediapart. This post is not an exact translation of those two articles but the original English version. The published articles were shortened to fit the newspapers’ formats.

–

We are being influenced by non-human entities that imitate humans, infiltrate human society, and work on behalf of unknown organizations. Our opinions, our actions, and our lives are being shaped by these entities. They are everywhere: in our homes, at work, in our hospitals. This is not the plot of a new Hollywood movie, nor some conspiratorial rambling about a secret alien society. This has been our world since the popularization of Artificial Intelligence (AI).

Even though the entire world started talking about Artificial Intelligence a little over two years ago with the release of ChatGPT, AI has been with us for much longer than that. AI systems have been introduced into our lives with or without our knowledge and consent. The recommendation algorithms of Netflix or Spotify, which suggest the next movie to watch or a nice playlist to listen to, are everyday examples. But more algorithms are impacting our lives than we think. Did you know that your mortgage request might have been granted by an AI system? Or that your resume might have been rejected by an AI? Or that the photo that stirred your emotions on the news outlet you read yesterday was AI-generated?

We are being exposed to AI, whether we want it or not. And AI is not neutral. People create or use an AI system to solve a problem. This could be too many mortgage requests or resumes for one person to go through, the difficulty of finding the right photo to reflect the tone of an article, or even the intention to manipulate the reader’s emotions. But how the system actually ends up working depends on a social context: what the solution is supposed to look like, the data that is used to create this solution, and the values that the system’s creator wants to uphold. And creators don’t always anticipate all the consequences of their choices.

Algorithmic bias is the concept that an algorithm systematically creates unfair outcomes, privileging one group of people over another, usually in ways that are not the intended function of the algorithm. Common examples are Amazon’s recruiting tool that discriminated against women, facial recognition algorithms that fail to tell black people apart, or medical systems that underestimate the medical needs of minorities. Algorithmic bias can have multiple causes. Often people will attribute it to data: if the data is biased, the system will be too. While this is true, it would be a mistake to think that data is the only culprit. For instance, in the United States, hospitals have been using an AI system to help them decide whether an incoming patient should be sent to the emergency room or not based on their medical history. Understanding the medical history of a patient is a very complex problem, and the developers of the algorithm made the assumption that people with a severe medical history will spend more money on healthcare than others. They therefore used the amount of money previously spent on healthcare as a substitute for medical history. However, for socio-economic reasons, black Americans spend on average less money on healthcare than white Americans with similar needs. As a result, the algorithm systematically underestimated black Americans’ medical needs. The data itself was not the issue here; the assumption built into the design was. It is important to note that algorithmic biases go much further than sexist or racist biases. For instance, researchers studying linguistic biases in ChatGPT have shown that it stereotypes dialects, which made speakers of these dialects feel uncomfortable and insulted. Cultural biases and misrepresentations are other issues associated with AI systems.

Why does it matter? After all, if we know these systems are biased, can’t we check the output ourselves and make up our own minds? Unfortunately, it is not that simple. We all have a tendency to favor decisions made with automation over decisions made without it. This is called automation bias, and it is well studied in psychology. It means that when AI systems present us with a solution, we are naturally inclined to adopt it. Yes, this applies even if you are aware that automation bias is a thing. Furthermore, researchers have also shown that using AI systems directly impacts our opinions. In one study, a language model was programmed to promote a specific opinion about social media. Participants who used this model wrote pieces that followed the promoted opinion, and even had their own stance on social media influenced by the model. This is a frightening finding. More and more text is being written with the help of ChatGPT and other AI assistants, and we have no control over the opinions programmed into them. Beyond text, AI systems are creating images, moderating content, and generally following rules and algorithms that we have no control over and that belong to a handful of very rich companies. What we see, what we read, what we and our children take as role models: all of that is being fed to us by AIs, and we have no say in it.

Or do we?

AI must become a concern for every citizen. Just as we vote on the economy, the environment, and social policies, we must also vote on AI, its development, and its adoption. We don’t need to become AI experts any more than we needed to become experts in geopolitics or finance. However, we have a duty to educate ourselves, to understand who is talking about AI on the world stage, and to consider the consequences of what they are saying. We must reflect on what we want and how we want it to be. AI is now, more than ever, political. We cannot remain passive observers of what is happening to us. We can and must have a say in it.